The application retrieves the rows that have only one digit in the sixth column and writes the output to /HVACOut under the default storage container for the cluster. It sets the Spark application name with val conf = new SparkConf().setAppName("WASBIOTest").
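The row filter can be checked locally without a cluster. The following is a minimal sketch in plain Scala, not the tutorial's own code: it assumes that columns are counted from one (so the sixth column is index 5) and that "one digit" means a single numeric character. The object name, function name, and sample rows are hypothetical.

```scala
object SixthColumnFilter {
  // Assumption: columns are counted from one, so the sixth column is index 5.
  val SixthColumn = 5

  /** True when the row has a sixth column made up of exactly one digit. */
  def hasSingleDigitSixthColumn(row: String): Boolean = {
    val fields = row.split(",")
    fields.length > SixthColumn &&
      fields(SixthColumn).length == 1 &&
      fields(SixthColumn).head.isDigit
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical rows for illustration; these are not taken from HVAC.csv.
    val rows = Seq(
      "a,b,c,d,e,7,g",  // sixth column is a single digit
      "a,b,c,d,e,12,g", // sixth column has two digits
      "a,b,c"           // too few columns
    )
    rows.filter(hasSingleDigitSixthColumn).foreach(println)
  }
}
```

Of the three sample rows, only the first passes the filter. Rows with fewer than six columns are rejected rather than raising an index error.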

IntelliJ export jar code

For the project's dependencies to be downloaded and resolved automatically, you must configure Maven. From the File menu, select Settings to open the Settings window.

From the Settings window, navigate to Build, Execution, Deployment > Build Tools > Maven > Importing. Select the Import Maven projects automatically checkbox. You'll then be returned to the project window.

From the left pane, navigate to src > main > scala >, and then double-click App to open App.scala. Replace the existing sample code with the application code and save the changes. This code reads the data from HVAC.csv (available on all HDInsight Spark clusters).
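As a rough sketch of what App.scala might contain, the block below combines the pieces the text describes: the WASBIOTest configuration, reading HVAC.csv, keeping rows whose sixth column is a single digit, and writing to /HVACOut. Several details are assumptions rather than facts from this article: the wasb:/// path to HVAC.csv, the use of wasb:/// to address the default storage container, the object name App, and the package name (taken from the project's directory layout). It needs the Spark core library on the classpath and is meant to run on the cluster.

```scala
package com.microsoft.spark.example

import org.apache.spark.{SparkConf, SparkContext}

object App {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("WASBIOTest")
    val sc = new SparkContext(conf)

    // Assumed location of the sample file in the cluster's default storage.
    val input = sc.textFile("wasb:///HdiSamples/HdiSamples/SensorSampleData/hvac/HVAC.csv")

    // Keep rows whose sixth column (index 5, counting columns from one)
    // is exactly one digit; shorter rows are dropped.
    val singleDigitRows = input.filter { line =>
      val fields = line.split(",")
      fields.length > 5 && fields(5).length == 1 && fields(5).head.isDigit
    }

    // Assumed to resolve to /HVACOut in the default storage container.
    singleDigitRows.saveAsTextFile("wasb:///HVACOut")

    sc.stop()
  }
}
```

Note that saveAsTextFile fails if the output directory already exists, so remove /HVACOut before running the job a second time.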

IntelliJ export jar update

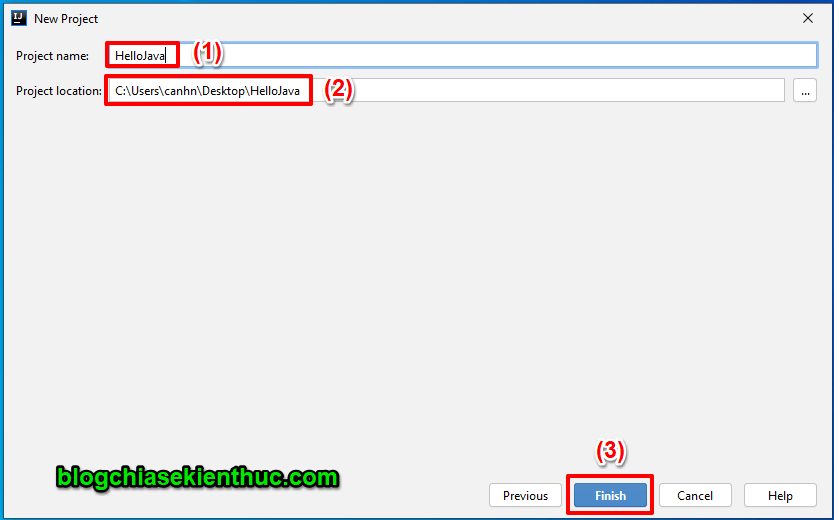

In the New Project window, provide the required information. The Project SDK field will be blank on your first use of IDEA; set it by navigating to the Java installation directory. The creation wizard integrates the proper version for Spark SDK and Scala SDK. If the Spark cluster version is earlier than 2.0, select Spark 1.x. This example uses Spark 2.3.0 (Scala 2.11.8).

Select the Create from archetype checkbox. From the list of archetypes, select :scala-archetype-simple. This archetype creates the right directory structure and downloads the required default dependencies to write a Scala program.

Expand Artifact Coordinates. Provide relevant values for GroupId and ArtifactId; this tutorial's project is named SparkSimpleApp and uses the com.microsoft.spark.example package. Verify the settings and then select Next. Verify the project name and location, and then select Finish. The project will take a few minutes to import.

Once the project has imported, from the left pane navigate to SparkSimpleApp > src > test > scala > com > microsoft > spark > example. Right-click MySpec, and then select Delete. You don't need this file for the application.

In the later steps, you update the pom.xml to define the dependencies for the Spark Scala application.

IntelliJ export jar install

Use IntelliJ to develop a Scala Maven application

This tutorial uses an Apache Spark cluster on HDInsight (for instructions, see Create Apache Spark clusters in Azure HDInsight), Java version 8.0.202, a Java IDE (IntelliJ IDEA Community 2018.3.4), and the Azure Toolkit for IntelliJ (see Installing the Azure Toolkit for IntelliJ).

Do the following steps to install the Scala plugin:

On the welcome screen, navigate to Configure > Plugins to open the Plugins window.

Select Install for the Scala plugin that is featured in the new window.

After the plugin installs successfully, you must restart the IDE.
